With the big announcement of new GPU ray-tracing hardware, Vlado explains what this breakthrough means for the future of rendering.

For nearly 20 years we've researched and implemented the highest end of photorealistic rendering with our ray-traced renderer V-Ray. Ray tracing is the most natural method to achieve true photorealism since it is based on the physical behavior of light. It's for this reason that the Academy of Motion Picture Arts and Sciences recognized our contribution to ray-traced rendering with a Sci-Tech Award for its widespread adoption in visual effects. A challenge with ray tracing, however, is that true photorealism takes a lot of computing power. We've always strived to make ray tracing faster, and ten years ago we began harnessing the power of GPUs in V-Ray. Now we're looking forward to leveraging new GPU hardware that is specifically designed for ray tracing calculations. This means that we can now do ray-traced rendering in real time.

The announcement of NVIDIA’s upcoming Turing GPU and the RTX product line that will use it is an important milestone in the history of computer graphics and ray tracing in particular. The professional Quadro RTX series was announced at SIGGRAPH 2018 and the consumer GeForce RTX series was announced at Gamescom 2018. These new GPUs will include dedicated hardware (called an RT Core) to help accelerate our ray tracing solutions, and they greatly extend the availability and affordability of NVLink, which can double the memory available for your scenes. With the full lineup announced, it’s worth spending a few minutes to understand what this means for your future rendering.

RT Cores within RTX Cards

Before we get to what the RT Cores provide, let’s briefly explain a few important things about ray tracing. The process of tracing a ray path through a scene can be generally split into two very distinct parts — ray casting and shading.

Ray Casting

Ray casting is the process of intersecting a ray with all the objects in the scene. Objects consist of different geometric primitives — triangles, curve segments (for hair), particles, etc. Objects may also be instanced across the scene. In a typical production scene, there may be many thousands of object instances that total hundreds of millions of unique geometric primitives. Intersecting a ray with all these primitives is a complex operation that involves advanced data structures like bounding volume hierarchies (BVH) that help to minimize the number of calculations.
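To make the role of a BVH more concrete, here is a minimal sketch in Python. It is not how V-Ray or the RT Cores implement ray casting; the names `Sphere`, `Node`, `build`, `hits_box` and `cast` are hypothetical, spheres stand in for real primitives, and the tree is built with a simple median split. The point is only to show how bounding boxes let a ray skip most of the scene.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

@dataclass
class Sphere:
    center: Vec
    radius: float

    def bounds(self):
        lo = tuple(c - self.radius for c in self.center)
        hi = tuple(c + self.radius for c in self.center)
        return lo, hi

    def hit(self, origin, direction):
        """Nearest hit distance for a unit-length direction, or None.
        Simplification: rays that start inside the sphere report no hit."""
        oc = tuple(o - c for o, c in zip(origin, self.center))
        b = sum(d * x for d, x in zip(direction, oc))
        c = sum(x * x for x in oc) - self.radius ** 2
        disc = b * b - c
        if disc < 0:
            return None
        t = -b - math.sqrt(disc)
        return t if t > 0 else None

@dataclass
class Node:
    lo: Vec
    hi: Vec
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prims: Optional[List[Sphere]] = None  # set only on leaf nodes

def build(prims, leaf_size=2):
    """Build a BVH by splitting at the median along the longest box axis."""
    los, his = zip(*(p.bounds() for p in prims))
    lo = tuple(min(v[i] for v in los) for i in range(3))
    hi = tuple(max(v[i] for v in his) for i in range(3))
    if len(prims) <= leaf_size:
        return Node(lo, hi, prims=list(prims))
    axis = max(range(3), key=lambda i: hi[i] - lo[i])
    ordered = sorted(prims, key=lambda p: p.center[axis])
    mid = len(ordered) // 2
    return Node(lo, hi, build(ordered[:mid], leaf_size), build(ordered[mid:], leaf_size))

def hits_box(node, origin, direction):
    """Slab test: does the ray overlap the node's box for some t >= 0?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, node.lo, node.hi):
        if d == 0.0:  # ray is parallel to this pair of slabs
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def cast(node, origin, direction, stats):
    """Return the nearest hit distance, counting primitive tests in stats['tests']."""
    if not hits_box(node, origin, direction):
        return None  # the whole subtree is skipped
    if node.prims is not None:
        best = None
        for p in node.prims:
            stats["tests"] += 1
            t = p.hit(origin, direction)
            if t is not None and (best is None or t < best):
                best = t
        return best
    found = [cast(child, origin, direction, stats) for child in (node.left, node.right)]
    found = [t for t in found if t is not None]
    return min(found) if found else None

# 64 spheres in a row; a brute-force ray cast would test all 64 of them.
spheres = [Sphere((3.0 * i, 0.0, 0.0), 1.0) for i in range(64)]
root = build(spheres)
stats = {"tests": 0}
nearest = cast(root, (30.0, 0.0, -10.0), (0.0, 0.0, 1.0), stats)
print(nearest, stats["tests"])  # prints: 9.0 2
```

In this toy scene the ray reaches its hit after testing 2 spheres instead of 64. Production scenes have far more primitives and far deeper trees, which is why the traversal itself becomes a significant cost.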

Shading

Shading is the process of determining the appearance of an object — including calculation of texture maps and material properties — and the way the object reacts to light. Shading is also responsible for determining which particular rays to trace to compute the object’s appearance — these can be rays for shadows from light sources, reflections, global illumination, etc. In a production scene, shading networks can be quite complicated and may include the calculation of procedural textures, bitmap lookups, and various ways to combine them in order to determine the material properties of the surface — like its diffuse color, reflection strength and roughness, normal (through bump mapping), etc. Lighting calculations are also included here.
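As a rough illustration of how shading both computes appearance and decides which rays to trace, the sketch below shades a purely diffuse surface lit by a single point light. It is a deliberately tiny example, not V-Ray's shading code: the function `shade_diffuse` and the `occluded` callback (a stand-in for the shadow ray cast) are hypothetical names, and a production shader would evaluate textures, reflections, global illumination and more.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade_diffuse(point, normal, albedo, light_pos, light_intensity, occluded):
    """Lambertian shading of one surface point under one point light.

    `occluded(point, direction, max_dist)` is a callback that performs the
    shadow ray cast and returns True if something blocks the light.
    `normal` is assumed to be unit length.
    """
    to_light = tuple(l - p for l, p in zip(light_pos, point))
    dist = math.sqrt(dot(to_light, to_light))
    wi = tuple(x / dist for x in to_light)   # unit direction towards the light
    cos_theta = max(0.0, dot(normal, wi))
    # Shading decides whether a shadow ray is needed at all:
    # surfaces facing away from the light never trace one.
    if cos_theta == 0.0 or occluded(point, wi, dist):
        return (0.0, 0.0, 0.0)
    irradiance = light_intensity * cos_theta / (dist * dist)
    return tuple(a / math.pi * irradiance for a in albedo)

# Usage: a grey surface lit from directly above, first unshadowed, then shadowed.
lit = shade_diffuse((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.5, 0.5, 0.5),
                    (0.0, 0.0, 2.0), 4.0 * math.pi, lambda p, d, t: False)
shadowed = shade_diffuse((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.5, 0.5, 0.5),
                         (0.0, 0.0, 2.0), 4.0 * math.pi, lambda p, d, t: True)
```

Even in this small case the shading function calls back into ray casting for the shadow test, which is why the two parts of the process are so closely coupled.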

Depending on the amount of geometry in the scene and the complexity of the shaders, the balance between ray casting and shading can vary a lot. Ray casting may take as much as 80% of the render time in very simple scenes, while heavy production scenes may spend only 20% of their time on it. The RTX graphics cards include specialized “RT Cores” to accelerate the ray casting process specifically. Since ray casting is a relatively complex algorithm, implementing it directly in hardware can lead to substantial speed increases. Note, however, that even if ray casting were infinitely fast and took zero time, the shading component of the ray tracing process would remain, so speed increases from using the RT Cores will vary from scene to scene depending on how much time is spent on ray casting. In general, scenes with simple shaders and lots of geometry spend more time on ray casting and less time on shading and will benefit the most from the RT Cores. In contrast, scenes with complicated shading networks and lots of procedural textures, but relatively simple geometry, may see a smaller speed boost.
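This limit is an instance of Amdahl's law: if only the ray casting share of the render time gets faster, the shading share caps the overall gain. The short sketch below works through that arithmetic. The function name `max_speedup` and its parameters are ours for illustration, and the numbers it produces are theoretical bounds derived from the percentages above, not measurements of any hardware.

```python
import math

def max_speedup(ray_cast_fraction, ray_cast_speedup=math.inf):
    """Overall speedup when only the ray casting share of render time is accelerated.

    ray_cast_fraction: share of total render time spent on ray casting (0..1).
    ray_cast_speedup:  how many times faster ray casting becomes
                       (infinity models the "zero time" case).
    """
    if not 0.0 <= ray_cast_fraction <= 1.0:
        raise ValueError("ray_cast_fraction must be between 0 and 1")
    if ray_cast_speedup <= 0:
        raise ValueError("ray_cast_speedup must be positive")
    shading_time = 1.0 - ray_cast_fraction            # unchanged by the RT Cores
    casting_time = ray_cast_fraction / ray_cast_speedup
    remaining = shading_time + casting_time
    return math.inf if remaining == 0 else 1.0 / remaining

# Upper bounds if ray casting took no time at all:
print(f"{max_speedup(0.8):.2f}")  # 5.00  (simple scene, 80% ray casting)
print(f"{max_speedup(0.2):.2f}")  # 1.25  (heavy shading, 20% ray casting)
```

So a scene that spends 80% of its time on ray casting could at best render five times faster, while one that spends only 20% could gain at most 25%, however fast the ray casting hardware is.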

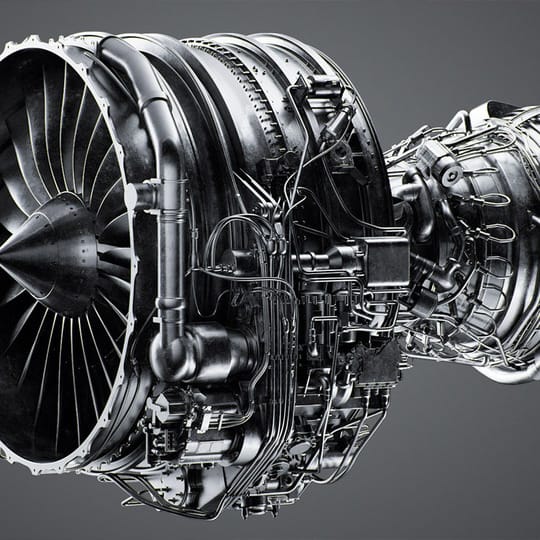

To illustrate the above points, we rendered the same scene with V-Ray GPU and with an experimental version that implements support for NVIDIA RTX. We rendered it once with a grey material override and once with the original materials. The scene has 95,668,638,333 triangles in total and was rendered with a fixed sampling rate of 512 samples per pixel.

Scene with a grey material override. In this case, about 76% of the time is spent in ray casting that will benefit from RT Cores.

Same scene with full materials. In this case about 59% of the time is spent in ray casting that will benefit from RT Cores.

While we are not quite ready to post performance results on unreleased NVIDIA hardware, we can give indications of what will benefit from RT Cores. The above scene was rendered on a pre-release version of the Turing hardware with pre-release drivers and an experimental modified version of V-Ray GPU in which we could track how much of the render time is spent on ray casting. With simpler shading, a bigger portion of the render time is spent on ray casting and we should see a larger benefit from RT Cores. We are also looking into ways to modify the way V-Ray GPU works in order to get the maximum performance from the new hardware. It should also be noted that the Turing hardware itself is significantly faster than the previous Pascal generation, even when running V-Ray GPU without any modifications.

It is important to note that applications must be explicitly programmed to take advantage of the RT Cores, meaning that existing ray tracing applications won’t benefit from them automatically. Those cores can be programmed through three publicly available APIs — NVIDIA OptiX, Microsoft DirectX (through the DXR extension), and Vulkan. DirectX and Vulkan are intended for use in games and real-time render engines whereas OptiX is best suited for production ray tracing as often found in offline renderers.

At Chaos Group, we have been working together with NVIDIA for nearly a year to research ways in which we can use the power of RT Cores within our products. V-Ray GPU is an obvious application of this technology and we already have experimental builds of V-Ray GPU using it — although optimizing the code and implementing full support for all features of V-Ray GPU will take some time. In the meantime, note that all recent releases of V-Ray GPU will work just fine with any RTX GPU, but will not take advantage of the RT Cores just yet. As we add support for RT Cores, V-Ray GPU will continue to support earlier cards as it always has.

In the video below, we show a version of V-Ray GPU modified to take advantage of the RT Cores, demonstrating that material and geometry updates still work after the modifications. The video is not designed to demonstrate performance; we will publish performance benchmarks in a separate blog post once the official hardware is released.

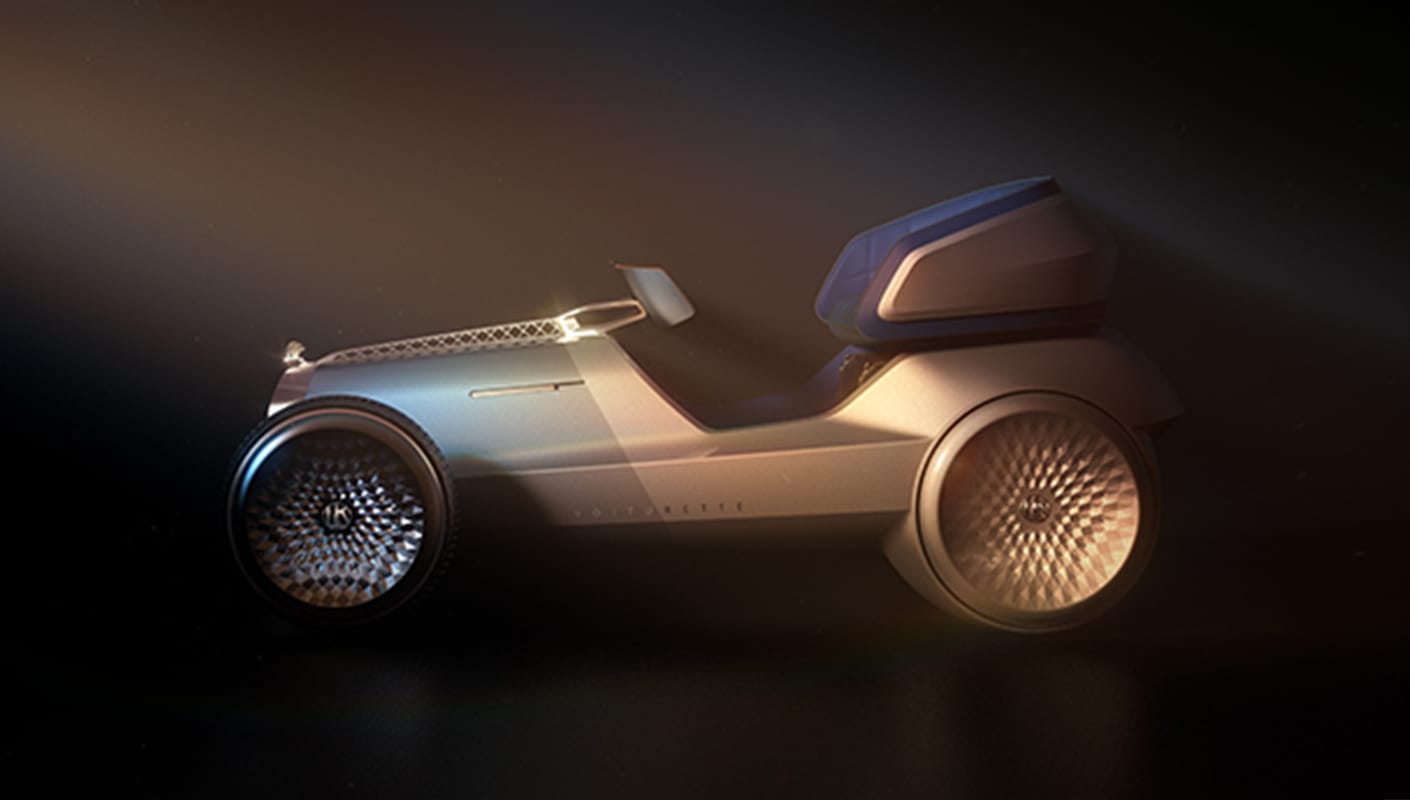

We have also explored using the RT Cores in the context of real-time ray tracing in our Project Lavina in order to determine the capabilities of the hardware. We were also interested in whether it is possible to completely replace rasterization with ray tracing for this use case. DXR was the first real-time API to publicly support RT Cores, so for the moment Project Lavina is based on it. We are also considering Vulkan in order to support the Linux operating system later on. The initial results are very promising and we intend to continue developing and improving this technology. Obviously it’s very early days; there is a significant amount of research being done right now on real-time path tracing and we expect that results will improve quickly in the following months, giving our users a new way to explore their scenes in a real-time environment without going through the tedious process of converting them for a real-time engine.

As always, our solutions are based on ray tracing exclusively — in contrast to existing game engines and DXR examples, which are only partially ray traced but still rely mainly on rasterization.

The RT Cores are only one part of the story, though. The RTX graphics cards also support NVLink, which can double the memory available to V-Ray GPU for rendering with minimal impact on performance.

NVLink

NVLink is a technology that allows two or more GPUs to be connected with a bridge and share data extremely fast. This means that each GPU can access the memory of the other GPU, and programs like V-Ray GPU can take advantage of that to render scenes that are too large to fit on a single card. Traditionally, when rendering on multiple graphics cards, V-Ray GPU duplicates the data in the memory of each GPU, but with NVLink the VRAM can be pooled. For example, if we have two GPUs with 11 GB of VRAM each, connected with NVLink, V-Ray GPU can render scenes that take up to 22 GB. This is completely transparent to the user: V-Ray GPU automatically detects and uses NVLink when available. So, while in the past doubling your cards only allowed you to double your speed, now with NVLink you can also double your VRAM.
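The difference between duplicated and pooled memory comes down to simple arithmetic, sketched below. This is an idealized model with hypothetical function names (`usable_vram_gb`, `scene_fits`); it does not query any hardware, and a real renderer's memory accounting may differ.

```python
def usable_vram_gb(per_gpu_gb, gpu_count, pooled):
    """Memory available for one scene, in GB, under an idealized model.

    Without pooling, every GPU holds a full copy of the scene, so the
    limit is the memory of a single card. With pooling, the cards'
    memory adds up.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return per_gpu_gb * gpu_count if pooled else per_gpu_gb

def scene_fits(scene_gb, per_gpu_gb, gpu_count, pooled):
    """True if a scene of scene_gb fits in the usable memory."""
    return scene_gb <= usable_vram_gb(per_gpu_gb, gpu_count, pooled)

# Two 11 GB cards and an 18 GB scene:
print(scene_fits(18, 11, 2, pooled=False))  # False: each card needs its own full copy
print(scene_fits(18, 11, 2, pooled=True))   # True: 22 GB available when pooled
```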

NVLink was introduced in 2016, and V-Ray GPU was the first renderer to support it officially, starting with V-Ray 3.6. Until now, the technology has only been available on professional Quadro and Tesla cards, but with the release of the RTX series, NVLink is also available on gaming GPUs, specifically the GeForce RTX 2080 and GeForce RTX 2080 Ti. Connecting two cards with NVLink requires a special NVLink connector, which is sold separately.

Conclusion

Specialized hardware for ray casting has been attempted in the past but has been largely unsuccessful, partly because the shading and ray casting calculations are usually closely related and having them run on completely different hardware devices is not efficient. Having both processes running inside the same GPU is what makes the RTX architecture interesting. We expect that in the coming years the RTX series of GPUs will have a large impact on rendering and will firmly establish GPU ray tracing as a technique for producing computer-generated images for both offline and real-time rendering. We at Chaos Group are working hard to bring these new hardware advances into the hands of our users.