How do you create photorealistic versions of the world’s best-known TV stars from scratch? We asked RealtimeUK to break down its epic video game cinematic.

Over the course of eight years and 73 episodes, Game of Thrones has gone from cult TV show to cultural phenomenon. As the series, based on George RR Martin’s A Song of Ice and Fire, draws to a close, millions of people around the world are tuning in to learn the fate of the Starks, Lannisters and Targaryens.

Inspired by the TV series, Yoozoo Games’ strategy title Game of Thrones Winter is Coming invites fans to create a house, customize their castle and train an army to expand their territory throughout Westeros.

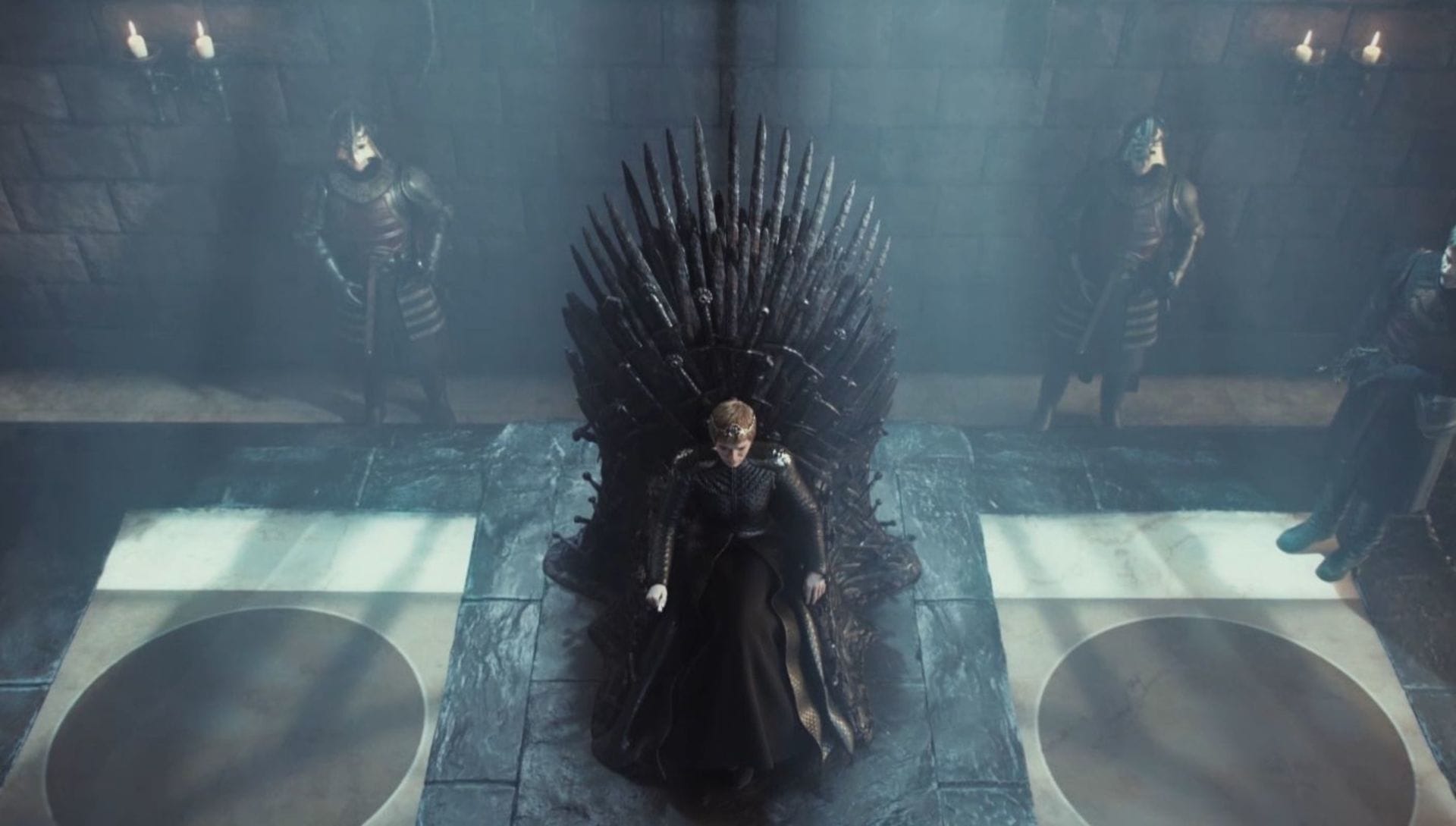

VFX studio RealtimeUK was tasked with creating a promotional video for the game. The studio’s work has grown steadily more ambitious; the photorealistic dinosaurs in its Jurassic World Evolution trailer have already racked up millions of views on YouTube. But Game of Thrones Winter is Coming presented a new challenge for the team: creating photorealistic digital versions of the cast and using them to convey the tension and conflict gamers can expect from the title.

Fresh off the trailer’s successful launch, we invited RealtimeUK’s Art Director Stu Bayley and his team to take a seat on the Chaos throne and answer a few questions.

Can we assume the RealtimeUK crew are big Game of Thrones fans?

We are all massive fans of the show, so this project was a dream to work on. But that came with huge pressure, as we know how well-known and loved the characters are all over the world. We had to nail the quality and create a truly authentic Game of Thrones experience for the viewer.

How did this project come about?

We were approached by developer Yoozoo Games, which had licensed the Game of Thrones intellectual property (IP). They needed a studio that could create a truly authentic representation of the Game of Thrones universe, and they felt a UK studio would already have a grasp of the aesthetic. They also wanted a studio that had experience working with a Chinese developer and had previously been trusted with a Hollywood IP. Since we’d just produced the Jurassic World Evolution trailer for Tencent, it all fell into place.

How much creative freedom and direction did you have?

From a creative perspective, we had free rein; the only constraints came from the game developer. The brief was to create an introduction to the main houses featured in the game that contained no battle action but still conveyed the notion that “winter is coming.” Throughout the process, every asset and idea had to be presented to HBO to ensure it aligned with the IP. We pitched the idea of ice growing over the surface of the animal sigils representing the houses as a way of illustrating the impending battles. Maybe it was just a coincidence that they also used that device in the first TV spot!

What were the challenges in creating the photorealistic characters?

This was the first time we had been tasked with creating CG versions of well-known characters, and there is nothing like jumping in at the deep end! To add to the complexity, we knew from the start that we would not have access to the actors or to any scan data. So, the search for reference began...

The client provided some good on-set photography, which was especially helpful for the clothing, and the whole team scoured the web for reference material. The main challenge of using multiple sources of reference is the variation in camera lenses and lighting; it’s amazing how completely these two factors change the perception of form in the human face. Wherever possible, we tracked down the lens data for each reference and did test renders with matching CG cameras, but that alone still fell short of making the characters instantly recognizable.
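To make the lens effect concrete, here is a small sketch of the standard pinhole-camera relations behind matching a CG camera to a reference photo. It is an illustration only, not RealtimeUK’s tooling: the function names are hypothetical, the model ignores lens distortion, and the sensor sizes and the roughly 250 mm face height are assumed example values, not production data.

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def equivalent_focal_length(focal_mm: float, ref_sensor_mm: float,
                            cg_sensor_mm: float) -> float:
    """Focal length that gives a CG camera the same field of view as a
    reference camera whose sensor (film back) width differs."""
    return focal_mm * cg_sensor_mm / ref_sensor_mm


def framing_distance_mm(focal_mm: float, sensor_height_mm: float,
                        subject_height_mm: float) -> float:
    """Camera-to-subject distance at which a subject of the given height
    exactly fills the frame vertically (pinhole model, similar triangles)."""
    return focal_mm * subject_height_mm / sensor_height_mm


if __name__ == "__main__":
    # Hypothetical close-up on an assumed 36 x 24 mm full-frame sensor,
    # framing an assumed 250 mm-tall face at three focal lengths.
    for focal in (24, 50, 85):
        fov = horizontal_fov_deg(focal, 36.0)
        dist = framing_distance_mm(focal, 24.0, 250.0)
        print(f"{focal} mm: FOV {fov:.1f} deg, camera {dist / 1000:.2f} m from the face")
```

The point the numbers make: keeping the same framing with a wider lens forces the camera much closer to the face, and it is that change of camera position that alters perspective (a closer camera enlarges the nose relative to the ears). This is why matching the focal length alone, without also matching camera placement, can still lose a likeness.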

After multiple iterations, our amazing character modeling and look dev team had created great assets, but we found the likeness was lost in the shots from the animatic. We realized we would have to go back and adjust all the lenses and camera positions to mimic the cinematography of the TV show, as that was the only way to get the instant read on each character we had been looking for. This was the tipping point at which the whole project came to life.

How did you build the environments?

We were lucky in terms of reference: we just had to watch the show!

After gathering all the reference, we broke down every scene from the animatic into smaller pieces, such as a pillar, a chandelier or a chair, and assigned each one to an artist. At RealtimeUK, one artist builds an asset from start to finish, whereas bigger companies split the work between artists by phase: modeling, texturing or shading. In most cases, modeling was done in 3ds Max and texturing in Substance Painter, with Mari used for bigger surfaces where we needed to paint easily across multiple UDIMs.

Tweaking the materials for the final render is always easier in 3ds Max than relying solely on the output from Substance. V-Ray’s interactive rendering was a great help here!

To let more than one artist work on the same scene, we referenced every element of the set into a single scene, except the walls and ground, using a combination of XRef objects, XRef scenes and V-Ray proxies. This made bigger, more complex scenes easier to handle while still letting us pull in the latest changes to each asset. Plus, the scenes were much lighter on hardware.

Certain assets needed special attention because the traditional workflow would have been too tedious or wouldn’t have provided a good enough result in the given amount of time. For example, instead of traditional modeling or sculpting for the candles, we used particles to get a realistic base which was then polished to get a great final result.

Did you make use of any other new or useful V-Ray features?

V-Ray’s support of Cryptomatte was critical in allowing us to control and fine-tune every element of the shots in post-production. The progressive renderer was useful for quick local renders before we sent shots to the farm. We also used V-Ray’s IPR to help set up lights and make sure shadows fell exactly where we wanted them.

How has the trailer been received?

The trailer has been phenomenally well received around the world and has attracted a huge amount of attention. A lot of people have expressed surprise that we didn’t use photogrammetry and that the characters were, in fact, sculpted by our team in ZBrush. The clients themselves were especially delighted with the end result!

What are you working on right now?

We’re working on multiple game trailers for AAA clients as well as expanding into the TV and film market. There are some big things in the pipeline and we can’t wait to share more with you!

Discover RealtimeUK’s upcoming projects at the company's official site.