Real-time rendering makes it possible to explore complex, photorealistic 3D projects instantly. Ricardo Ortiz guides us through this exciting new world.

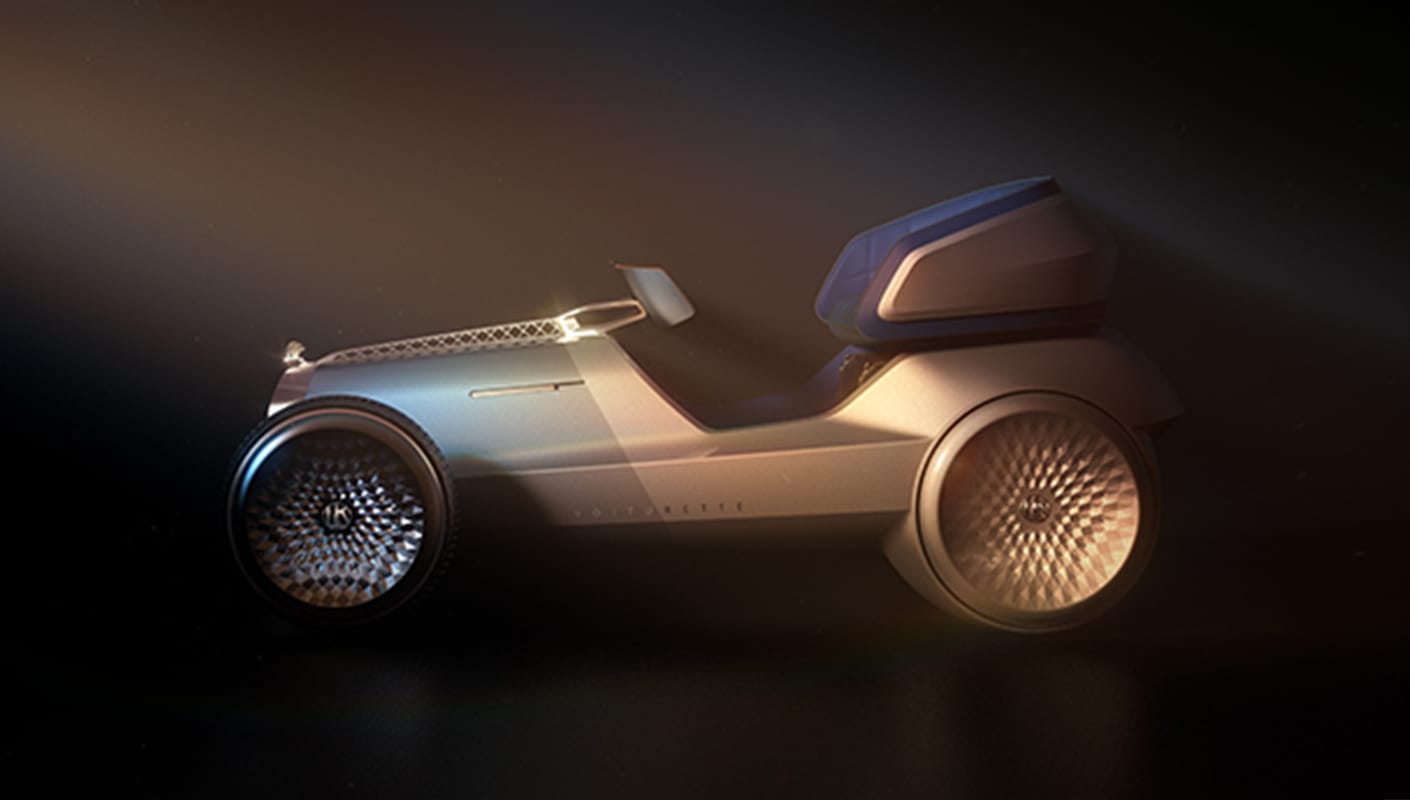

Architects, designers, and 3D artists have dreamed of real-time ray-traced graphics for many years. Being able to explore, interact with, and change a scene in real time can shorten workflows, accelerate the time to finished shot, and make it easier for clients to understand and manipulate even your most complex designs.

This article will explore the technological developments that have made real-time rendering possible today, as well as the challenges developers have faced in creating these experiences.

What is real-time rendering?

In my previous article, What is 3D rendering—a guide to 3D visualization, we reviewed the two methodologies that allow us to create 3D images with a computer: ray tracing and rasterization. To recap:

- Ray tracing generates an image by tracing rays of light from a camera through a virtual plane of pixels and simulating the effects of their encounters with objects.

- Rasterization builds 3D models of objects from meshes of virtual triangles and draws them by projecting each triangle onto the pixels of the screen.
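
To make the contrast concrete, here is a minimal Python sketch of the two directions of travel: ray tracing starts at a pixel and goes out into the scene, while rasterization starts at a piece of geometry and finds the pixel it lands on. The function names, the pinhole camera at the origin looking down the negative z axis, and the 60-degree field of view are all assumptions made for illustration, not how any particular renderer is written.

```python
import math

def primary_ray(px, py, width, height, fov_deg=60.0):
    """Ray tracing: direction of the ray from the camera through pixel (px, py)."""
    aspect = width / height
    half = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel center onto a virtual image plane one unit in front of the camera.
    x = (2 * (px + 0.5) / width - 1) * aspect * half
    y = (1 - 2 * (py + 0.5) / height) * half
    length = math.sqrt(x * x + y * y + 1)
    return (x / length, y / length, -1 / length)

def project_vertex(vertex, width, height, fov_deg=60.0):
    """Rasterization: pixel coordinates that a 3D vertex projects onto."""
    x, y, z = vertex
    aspect = width / height
    half = math.tan(math.radians(fov_deg) / 2)
    # Perspective divide; z is negative for points in front of the camera.
    ndc_x = x / (-z * half * aspect)
    ndc_y = y / (-z * half)
    return ((ndc_x + 1) / 2 * width, (1 - ndc_y) / 2 * height)
```

Under these assumptions the two mappings are inverses: passing the direction returned by `primary_ray(10, 20, 64, 48)` to `project_vertex` lands back at the center of pixel (10, 20).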

Each has its strengths: ray tracing is designed for high-quality photorealistic visualization, while rasterization prioritizes interactive performance. The logical question for anyone working in the CG industry is whether we can have both at once: the quality of ray tracing with the speed and interactivity of real-time rendering. The answer is yes!

To understand how photoreal real-time rendering works, we need to delve into the two phases involved in the calculation of a 3D scene by physically accurate renderers: ray casting and shading.

What is ray casting?

As its name suggests, ray casting is the process of testing a ray of light against the objects in the scene to find out which one it hits first. In a large scene, there can be millions of pieces of geometry and billions of polygons. Testing every ray against every polygon would be far too slow, so renderers organize the geometry into acceleration structures, such as bounding volume hierarchies (BVHs), that let each ray skip most of the scene.
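
As a rough illustration, the sketch below casts one ray against a list of spheres by brute force and keeps the closest hit. Spheres stand in for real geometry only because their intersection test is short, and the function names are made up for this example. The loop over every object is exactly the cost that the algorithms mentioned above are designed to reduce.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is normalized.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind (or exactly at) the ray origin
            return t
    return None

def cast_ray(origin, direction, spheres):
    """Brute force: test every sphere and keep the closest hit."""
    nearest = None
    for index, (center, radius) in enumerate(spheres):
        t = intersect_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, index)
    return nearest  # (distance, sphere index) or None
```

With two unit spheres centered at `(0, 0, -5)` and `(0, 0, -3)`, a ray from the origin along `(0, 0, -1)` reports the second sphere, because it is the first one the ray reaches.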

How does shading work?

Shading determines the appearance of an object through the way it reacts to light. The shading process also defines the rays that provide information about shadows, reflections, and global illumination. Shading networks play a major role in large scenes: the more complex they are, the more computationally expensive they are to evaluate.
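
The simplest possible case can be sketched in a few lines of Python: a matte (Lambertian) surface lit by a single point light. This is an illustrative assumption, far simpler than the shading networks of a production renderer, and the `is_occluded` callback is a hypothetical hook that stands in for casting a shadow ray back into the scene.

```python
import math

def shade_diffuse(point, normal, light_pos, albedo,
                  is_occluded=lambda origin, direction, distance: False):
    """Color of a matte surface point lit by one point light.

    Assumes `normal` is normalized and ignores light falloff for brevity.
    """
    to_light = tuple(l - p for l, p in zip(light_pos, point))
    distance = math.sqrt(sum(c * c for c in to_light))
    light_dir = tuple(c / distance for c in to_light)
    # Shading defines a new ray: a shadow ray from the surface toward the light.
    if is_occluded(point, light_dir, distance):
        return (0.0, 0.0, 0.0)
    # Lambert's cosine law: brightness depends on the angle to the light.
    cos_theta = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(a * cos_theta for a in albedo)
```

Even this tiny shader asks the ray caster for more work through the shadow ray. Reflections and global illumination add further rays in the same way, which is why the two phases are so closely linked.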

Finding the balance

The amount of geometry and the complexity of the shaders can alter the balance between ray casting and shading. For example, in a scene with a single simple material applied to millions of polygons, ray casting can represent around 90% of the rendering time. In contrast, in scenes with many complicated materials but less geometry, ray casting can take up only 20% of the evaluation time, and shading accounts for the rest.
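
A quick calculation shows why this balance matters. The sketch below estimates the overall speed-up when only the ray casting portion of a render gets faster. The tenfold acceleration is a made-up figure chosen purely for illustration, not a measurement of any hardware.

```python
def overall_speedup(ray_casting_share, ray_casting_speedup):
    """Whole-render speed-up when only ray casting is accelerated.

    `ray_casting_share` is the fraction of render time spent ray casting
    (0.9 and 0.2 in the examples above); shading time is left unchanged.
    """
    shading_share = 1.0 - ray_casting_share
    return 1.0 / (shading_share + ray_casting_share / ray_casting_speedup)

# Hypothetical 10x faster ray casting applied to the two scenes above.
geometry_heavy = overall_speedup(0.9, 10.0)
shader_heavy = overall_speedup(0.2, 10.0)
```

Under that assumption, the geometry-heavy scene renders a little over five times faster, while the shader-heavy scene gains only about 20%. Speeding up one phase pays off only as far as that phase dominates the render.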

Both these processes demand hardware and software that are powerful and efficient. Fortunately, we are in the middle of a technological revolution thanks to an industry that is constantly evolving: video games.

Game engines

Video game engines have given us the ability to experience computer graphics in real time through rasterization techniques, accelerated by the development of graphics processing units (GPUs). Found in popular consoles and gaming PCs, these chips can power spectacular graphics in 4K and 8K resolutions on super-widescreen displays and VR headsets.

We can tap into this computational power with GPUs capable of rendering even our most complex projects in real time. NVIDIA’s RTX products, for example, feature dedicated ray tracing (RT) cores that accelerate ray casting, while the GPU’s general-purpose cores handle shading.

NVIDIA OptiX

Ray tracing is a complex algorithm, and taking advantage of RT cores requires specialist programming. V-Ray does this through NVIDIA OptiX, a ray tracing API that has already become common in rendering engines.

NVIDIA OptiX offers many advantages for ray tracing. It allows us to scale across multiple GPUs to increase computing power, combine GPU memory through NVLink technology, and evaluate huge scenes. One of its most important features is an AI-accelerated denoiser, which removes the image noise generated by the rendering process and so reduces the number of render iterations needed for a clean image.

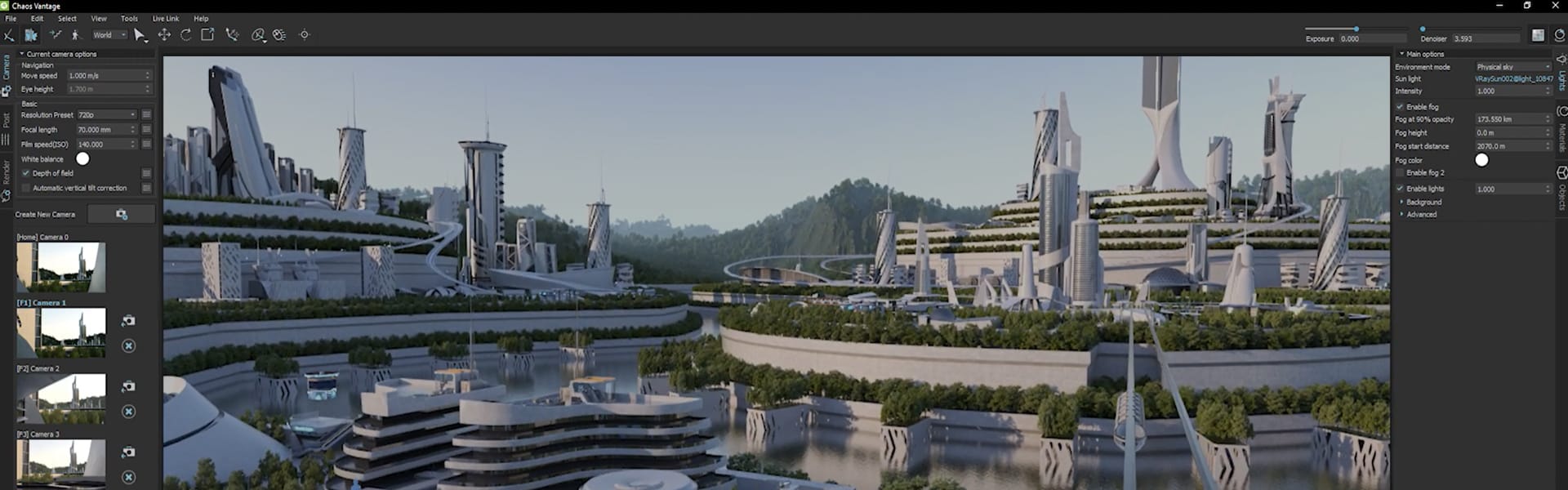

Thanks to this incredible hardware, we can experience real-time rendering with a high level of realism. And, with the right real-time rendering software, such as Chaos Vantage, architects, designers, and artists can seamlessly and intuitively explore their 3D projects.

In my next article, we’ll discover how Chaos’ software taps into real-time rendering hardware. But if you can’t wait, you can download and use Chaos Vantage right away.